Reinforcement Learning

The reinforcement learning hypothesis, as put forward by Michael Littman, states that “Intelligent behavior arises from the actions of an individual seeking to maximize its received reward signals in a complex and changing world.”

At the risk of putting it too simply, reinforcement learning is an approach to machine learning that allows an agent to learn the best way to accomplish a task through trial and error. A fun-to-watch example of RL in robotics is shown below (see Geijtenbeek, et al., 2013), where synthetic (digital) bipedal creatures learn over many generations (episodes) to walk. One notable aspect of this example is that the synthetic creatures are driven by advanced physics simulations of muscle-based locomotion - the knowledge gained from these simulations can then be transferred to physical robots walking in the real world.

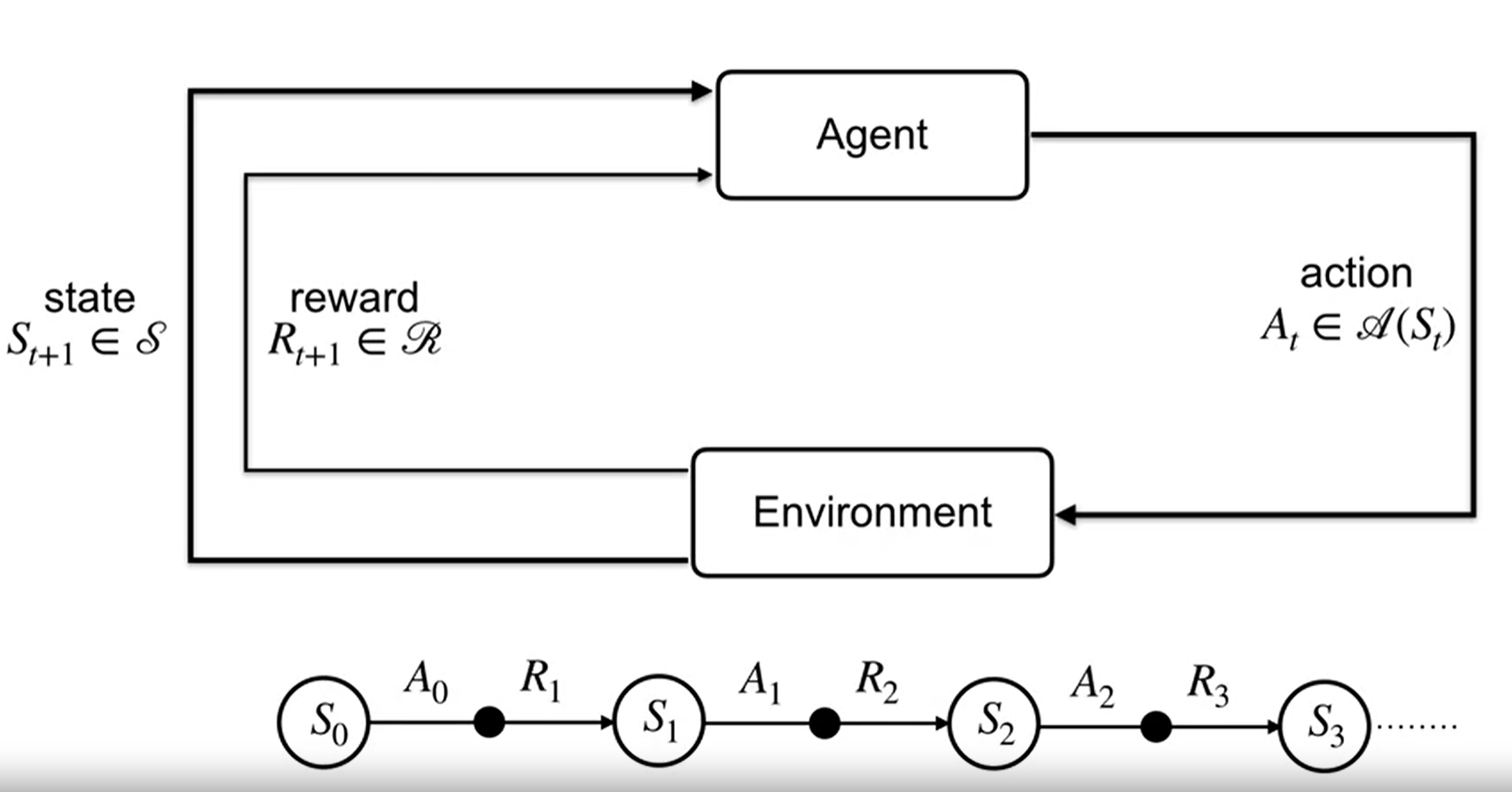

Robotics applications provide many exciting - and often amusing - examples of RL in action, but how does it work? The reinforcement learning task has been stated more generally (although not exclusively) as a Markov Decision Process, or MDP (Sutton and Barto, 2021).

In an MDP, an agent selects among a possible set of actions conditional on an observed state from the agent’s environment. The agent’s goal is to select the action that maximizes its total return over time, where the return is defined as a function of incremental rewards received after taking actions in the environment.
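To make the state, action, reward, and return vocabulary concrete, here is a minimal Python sketch of the agent-environment loop. Everything in it is an assumption made for illustration: `CorridorEnv` is a hypothetical toy environment, the `reset`/`step` interface is just a common convention, and the return is taken to be the discounted sum of rewards with discount factor `gamma`, which is one common choice among several.

```python
from typing import Callable, List, Tuple


def discounted_return(rewards: List[float], gamma: float = 0.9) -> float:
    """Discounted sum r_1 + gamma*r_2 + gamma^2*r_3 + ... for one episode."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


class CorridorEnv:
    """Hypothetical toy environment: a 1-D corridor with states 0..length.

    The agent starts in state 0 and the episode ends in state `length`.
    Every step is rewarded with -1, so shorter paths earn a higher return.
    """

    def __init__(self, length: int = 4) -> None:
        self.length = length
        self.state = 0

    def reset(self) -> int:
        self.state = 0
        return self.state

    def step(self, action: int) -> Tuple[int, float, bool]:
        # action 1 moves right, any other action moves left
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length)
        done = self.state == self.length
        return self.state, -1.0, done


def run_episode(env: CorridorEnv,
                policy: Callable[[int], int],
                max_steps: int = 100) -> List[float]:
    """Observe state, choose action, receive reward; repeat until done."""
    state = env.reset()
    rewards: List[float] = []
    for _ in range(max_steps):
        action = policy(state)                  # action conditional on state
        state, reward, done = env.step(action)
        rewards.append(reward)
        if done:
            break
    return rewards


if __name__ == "__main__":
    rewards = run_episode(CorridorEnv(), policy=lambda state: 1)
    print(len(rewards), discounted_return(rewards))
```

Here the policy is fixed by hand (always move right). The learning part of RL is about improving that policy from the rewards the loop produces.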

In this context, an environment can be anything: a flat terrain with a constant gravitational field as in the bipedal locomotion example above, a Go board as in the case of DeepMind’s AlphaGo, or a data center as in the case of DeepMind’s real-world RL-powered industrial cooling system.

Applications and case studies of RL in the water sector

A number of interesting examples of RL applications in the water sector have appeared in recent literature, ranging from reservoir operations to active stormwater and wastewater operational control strategies. I’ll do my best to summarize these contributions here.

Reinforcement learning for real-time control of stormwater management systems

Reinforcement learning for real-time control (RTC) in stormwater management has been an active area of research lately, most notably at Prof. Jonathan Goodall’s Hydroinformatics Research Group in the Link Lab at the University of Virginia. Also worth noting is the occasional collaboration between Goodall’s team and Xylem.

- Saliba, et al., 2020 framed the problem of real-time control (RTC) of retrofitted stormwater infrastructure as an RL problem. Compared against passive systems (no controls), the RL implementations reduced flooding volume by 70.5% on average. This work used a simplistic simulated environment built in SWMM5. It also introduces the difficulties of dealing with uncertain data inputs (imperfect state observations).

- Wang, et al., 2020 - a simple conceptual stormwater system is simulated with SWMM5, using the pyswmm package for interactive control of the model by the RL agent. A reward function designed around an expert baseline control policy is introduced to limit exploration during dry periods. The actor-critic method Deep Deterministic Policy Gradient (DDPG) is used. While several simplifying assumptions are made, this paper helped to depict the potential of applying RL to active stormwater control systems with the single objective of flood mitigation.

- Bowes, et al., 2020 continued this line of inquiry on hypothetical urban catchments, but extended the baseline comparison to include industry-standard rule-based control (RBC), as well as model predictive control with genetic algorithm optimization (MPC-GA). RL was shown to quickly outperform RBC, while performing comparably with MPC-GA but at significantly less computational expense.

- Bowes, et al., 2022 - This work used SWMM5 simulations of real catchments in Norfolk, VA, with tidal influence and multiobjective optimization to mitigate flooding and maximize sediment capture.
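To illustrate what framing stormwater RTC as an RL problem can look like, here is a deliberately tiny Python sketch. It is not taken from any of the papers above and it does not use SWMM5 or pyswmm: `PondEnv` is a hypothetical single-pond mass balance, the storm series is made up, and `fixed_outlet` and `rule_based` are hand-written stand-ins for a passive system and a rule-based controller. The point is only to show the three ingredients an RL agent would need: a state (stored volume), an action (valve opening), and a reward (negative flood volume).

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple


@dataclass
class PondEnv:
    """Hypothetical single-pond mass balance (an assumption, not SWMM5)."""
    capacity: float = 10.0     # storage volume before the pond floods
    max_release: float = 2.0   # volume released per step with the valve fully open
    volume: float = 0.0        # current stored volume (the observed state)

    def reset(self) -> float:
        self.volume = 0.0
        return self.volume

    def step(self, inflow: float, valve: float) -> Tuple[float, float]:
        """Apply a valve opening in [0, 1]; return (next state, reward)."""
        valve = min(max(valve, 0.0), 1.0)
        released = min(self.volume, valve * self.max_release)
        self.volume = self.volume - released + inflow
        flood = max(self.volume - self.capacity, 0.0)
        self.volume -= flood           # overflow leaves the pond
        return self.volume, -flood     # reward penalizes flooding


def total_flooding(policy: Callable[[float], float],
                   inflows: Sequence[float],
                   env: Optional[PondEnv] = None) -> float:
    """Run one storm under a policy and return the total flood volume."""
    if env is None:
        env = PondEnv()
    state = env.reset()
    total_reward = 0.0
    for inflow in inflows:
        state, reward = env.step(inflow, policy(state))
        total_reward += reward
    return -total_reward


def fixed_outlet(volume: float) -> float:
    """Stand-in for a passive system: the valve setting never changes."""
    return 0.25


def rule_based(volume: float) -> float:
    """Stand-in for rule-based control: open fully once the pond is half full."""
    return 1.0 if volume > 5.0 else 0.25


# Made-up inflow series for one storm event
STORM = [0.0, 0.0, 4.0, 6.0, 5.0, 1.0, 0.0, 0.0]

if __name__ == "__main__":
    print("fixed outlet:", total_flooding(fixed_outlet, STORM))
    print("rule based:  ", total_flooding(rule_based, STORM))
```

An RL agent would take the place of the hand-written policy functions, learning the mapping from observed volume (and possibly rainfall forecasts) to valve opening by maximizing the accumulated reward.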

Reinforcement learning for operational control of wastewater treatment systems

Several studies of the application of RL to operational aspects of wastewater treatment systems have been undertaken in the past decade. Croll, et al. (2023a, 2023b) recently conducted a systematic review of the application of RL in the wastewater domain, with an emphasis on identifying the challenges and research priorities necessary to realize the potential of using RL methodologies to improve operations and maintenance of wastewater infrastructure.

- Croll, et al., 2023a provide an overview of existing RL applications for wastewater treatment control optimization found in the literature. They go further by evaluating five “key challenges” and “potential paths forward” that must be addressed prior to widespread adoption, namely:

  - practical RL implementation

  - data management

  - integration with existing process models

  - building trust in empirical control strategies

  - bridging gaps in professional training

- They point out that research into the potential of RL in the wastewater industry has been underway for two decades, since Yang, et al., 2004.

- They also draw some interesting parallels with the more mature applications of RL in the domain of mobile (cellular) network optimization, and suggest that its similarities with the wastewater treatment control task make it a good example for those interested in applying RL in wastewater management.

- Croll, et al., 2023b - Systematic Performance Evaluation of Reinforcement Learning Algorithms Applied to Wastewater Treatment Control Optimization

Why Reinforcement Learning?

It’s reasonable to ask why RL should be used at all. Why not just program a stormwater system with predefined rules about how to operate? The reinforcement learning (RL) paradigm is unique in its focus on learning optimal actions to be taken by an agent operating a system over time. DeepMind’s data-center cooling application offers a compelling example: “…the AI [learned] to take advantage of winter conditions and produce colder-than-normal water, which reduces the energy required for cooling within the data center. Rules don’t get better over time, but AI does.”

Richard Sutton, principal investigator in the RL and AI lab at the Alberta Machine Intelligence Institute, provides a(nother) compelling statement for consideration:

I am seeking to identify general computational principles underlying what we mean by intelligence and goal-directed behavior. I start with the interaction between the intelligent agent and its environment. Goals, choices, and sources of information are all defined in terms of this interaction. In some sense it is the only thing that is real, and from it all our sense of the world is created. How is this done? How can interaction lead to better behavior, better perception, better models of the world? What are the computational issues in doing this efficiently and in realtime? These are the sort of questions that I ask in trying to understand what it means to be intelligent, to predict and influence the world, to learn, perceive, act, and think (source).

In pursuit of answers to the above questions, RL benefits from applying other ML approaches to help process signals of state and reward, and to learn to approximate value and policy functions. In this way, RL is a means of systematizing ML applications, i.e., of deploying ML solutions in a manner such that they improve over time with experience (Mitchell).
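As a concrete picture of learning a value function from experience, here is a minimal tabular Q-learning sketch in Python. The corridor task, the hyperparameters, and all function names are assumptions chosen for illustration. The table `q` stands in for the value function; in deep RL that table is replaced by a learned approximator such as a neural network, which is where the other ML approaches mentioned above come in.

```python
import random
from typing import List, Tuple

N_STATES = 5       # states 0..4; state 4 is terminal (hypothetical toy task)
ACTIONS = (0, 1)   # 0 = step left, 1 = step right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Deterministic corridor dynamics: every move is rewarded with -1."""
    move = 1 if action == 1 else -1
    nxt = min(max(state + move, 0), N_STATES - 1)
    return nxt, -1.0, nxt == N_STATES - 1


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    """Learn action values q[state][action] with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 200:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                       # explore
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])   # exploit
            nxt, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best next value
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            steps += 1
    return q


def greedy_policy(q: List[List[float]]) -> List[int]:
    """Best known action in each non-terminal state."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    print(greedy_policy(q_learning()))
```

Nothing in the code tells the agent that moving right is correct. The preference emerges from the rewards alone, which is the sense in which such a system improves with experience.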

More thoughts to come…

References

I’ve found the following references very helpful in gaining an understanding of reinforcement learning concepts.

Primary

Indispensable references:

- Sutton and Barto maintain the authoritative text on the topic, and generously make a PDF version of their book freely available.

- University of Alberta’s reinforcement learning specialization requires a Coursera subscription, but is well worth the cost for a professional data scientist, and is a great hands-on complement to the Sutton and Barto text.

- Bertsekas, 2019. Reinforcement Learning and Optimal Control. Author’s description: The purpose of the book is to consider large and challenging multistage decision problems, which can be solved in principle by dynamic programming and optimal control, but their exact solution is computationally intractable. We discuss solution methods that rely on approximations to produce suboptimal policies with adequate performance. These methods are collectively referred to as reinforcement learning, and also by alternative names such as approximate dynamic programming, and neuro-dynamic programming.

Secondary/Application Specific

Compelling examples and research applications - many of these are discussed in context above, but are also listed here for completeness:

- UVA Hydroinformatics lab and GitHub repository

- Prof. Steve Brunton’s reinforcement learning lectures

- Google DeepMind’s autonomous data center cooling system is a great example of applied RL for industrial control systems

- Seo, et al., 2021 provide a compelling use case of applied deep reinforcement learning (DRL) to optimize pump scheduling at a wastewater treatment plant in South Korea.